Tangible Media research

I worked with the Tangible Media Group, led by Hiroshi Ishii, at the MIT Media Lab. The group explores dynamic physical media: objects that can change physical properties such as shape, texture, and stiffness in real time through interactions with their digital and physical environments. The divide between the worlds of bits and atoms is wrong; we're finding ways to merge them.

In particular, I've worked with pin-based shape displays.

Coupling Static and Dynamic Shape

I first worked with Philipp Schoessler to explore coupling static and dynamic objects so that each makes up for the limitations of the other. Real-time shape displays like ours are limited in several ways: for example, their pins move only vertically, and they cannot form overhangs. But the display can manipulate static objects like wooden cubes to construct advanced structures and to constrain user interactions. Humans, in turn, can deftly rotate, stack, and horizontally translate static objects, and the shape display can augment these actions with dynamically generated physical meaning.

We published this work at UIST:

- Kinetic Blocks - Actuated Constructive Assembly for Interaction and Display

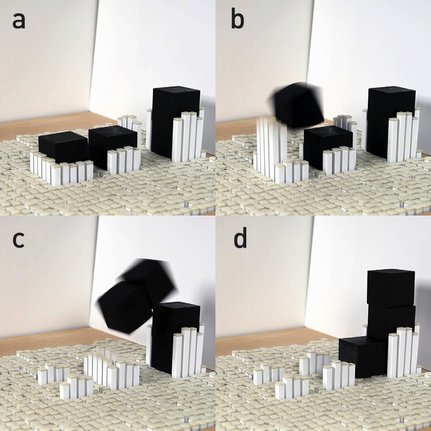

Structures can be built with various construction techniques, like catapulting.

Simulating Materiality

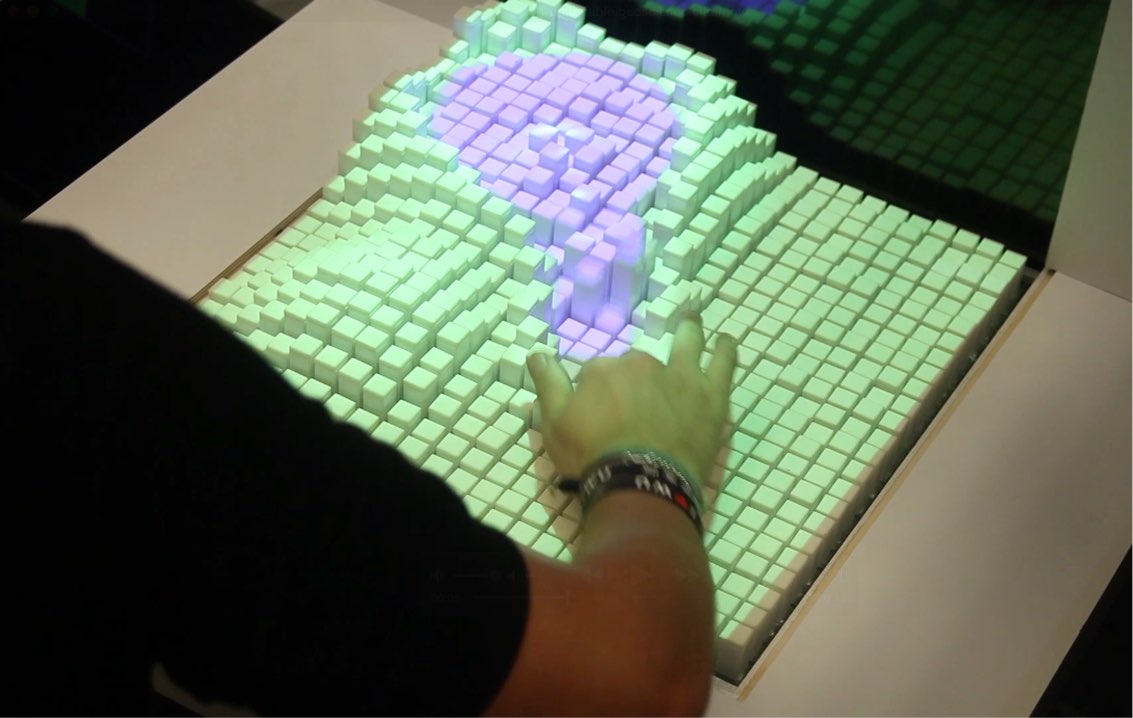

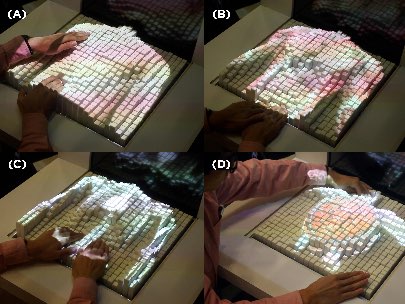

I then joined Luke Vink and Ken Nakagaki in exploring simulated materiality in touch interactions: can the shape display feel like rubber, liquid, metal, flesh? At this point I was the lead software engineer for Tangible Media.
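As a rough illustration only (not the code behind this work), one simple way to make a pin feel like different materials is to treat it as a virtual spring: the harder the user presses, the further the pin yields, and the stiffness sets how much. The sketch below assumes the display can report a per-pin press force; the `Material` type, the `pin_height` function, and the stiffness values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    """A simulated material; stiffness values are illustrative, not measured."""
    name: str
    stiffness_n_per_mm: float  # force needed to push the pin down by 1 mm


def pin_height(rest_height_mm: float, force_n: float, material: Material,
               min_height_mm: float = 0.0) -> float:
    """Return the target pin height under a press, using Hooke's law.

    displacement = force / stiffness, clamped so the pin never goes
    below its physical travel limit. Negative forces are ignored.
    """
    force_n = max(0.0, force_n)
    displacement_mm = force_n / material.stiffness_n_per_mm
    return max(min_height_mm, rest_height_mm - displacement_mm)


# Hypothetical materials: a soft one yields a lot, a stiff one barely moves.
RUBBER = Material("rubber", stiffness_n_per_mm=0.5)
METAL = Material("metal", stiffness_n_per_mm=50.0)

if __name__ == "__main__":
    for material in (RUBBER, METAL):
        height = pin_height(rest_height_mm=50.0, force_n=2.0, material=material)
        print(f"{material.name}: pin settles at {height:.2f} mm")
```

A pure spring only captures elasticity; conveying something like liquid or flesh would need additional terms (damping, coupling between neighboring pins), which this sketch leaves out.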

I first sped up our work by rebuilding our shape display software stack from the ground up. The codebase was five years old and full of hacks, and software written for one machine was incompatible with the others. I fixed these problems and others and structured a clear, modular system. Luke, who is primarily a designer, stated, "What I love most is that I can teach this codebase to someone new, and they can go build something with it."

We all built things with it. I built half a dozen applications exploring shape recording, touch sensing, force feedback, and proximity sensing. I supported the development of another half dozen that summer and more since. (And by now, far more has been built without my involvement than was built with it.)

With a fourth collaborator, Jared Counts, we wrote an award-winning CHI paper:

- Materiable: Rendering Dynamic Material Properties in Response to Direct Physical Touch with Shape Changing Interfaces

In medical contexts, this materiality aids in understanding anatomy.

Our codebases are available on GitHub. They're configured for our machines, but if it's helpful please check them out! Feel free to ping me with questions.

- omniFORM, our fresh new codebase

- inFORM_tracking, the implementations behind Shape Synthesis